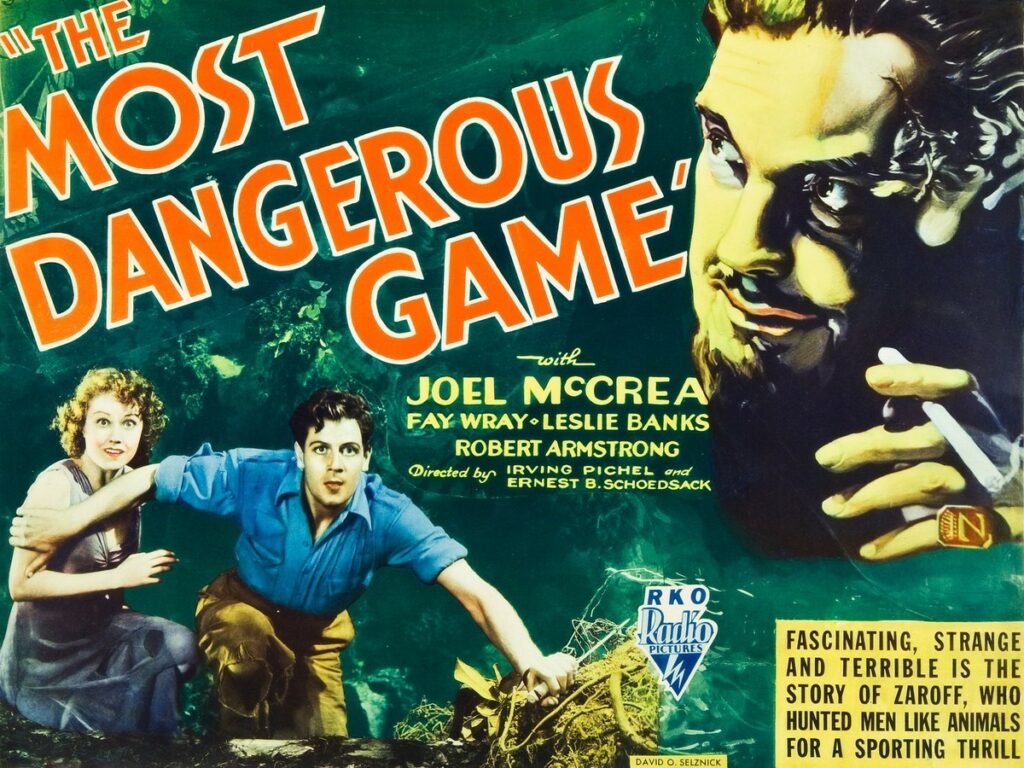

In the short story “The Most Dangerous Game” by Richard Connell (and the movies based on the story), a big-game hunter traps humans on an island because he is bored with hunting animals and wants, instead, to hunt “the most dangerous game”: humans.

Well, with healthcare fraud, we have been “hunting” the misdeeds of humans for decades. While this has never been boring, we have had to evolve our tools and approaches to keep up with the ever-evolving ways in which fraud, waste, and abuse can be perpetrated.

In recent years, months, and days, the newest arrow in the quiver is artificial intelligence. Companies are practically climbing over each other to create the latest software tool that uses artificial intelligence to ferret out fraud. Whether that tool is used in data mining, predictive analytics, or medical review, everyone is getting on the bandwagon. But as in “I, Robot” (or any futuristic movie featuring robots or artificial intelligence; see “2001: A Space Odyssey”), the tools we use can be turned against us.

In the same way that artificial intelligence can be used to improve program integrity, it can be used to facilitate and disguise the fraud, waste, and abuse we are fighting. Videos posted on social media show providers sharing how to use AI to code, file appeals, and create documentation. While these uses can be benign, AI’s ability to create documentation that supports claims (whether the actual service took place or not) can also serve illicit purposes, and that directly affects the scope of potential fraud, how it can be detected, and how medical reviews need to be conducted.

And the opportunities do not stop there. Device manufacturers can use AI to create better sales brochures and advertisements. While this can help with access to care, it can also be used to sell more equipment to providers by emphasizing the revenue that can be generated through its use, including AI-created recommendations for which codes to bill for the devices to maximize revenue.

So, as we have seen so many times in the history of fighting healthcare fraud, the fraud fighters must evolve. Our use of AI has drawn a counterpunch. Now we must step back and see what we must do to continue the hunt given these new factors. There has been recent discussion about “Reverse ChatGPT,” a tool that detects the use of AI. A tool such as ZeroGPT has been used (with qualified success) to detect whether a student’s submission involved the use of AI.

Similar tools must be honed for healthcare program integrity. A tool that detects if artificial intelligence was used to generate a medical record may become a necessary first step in conducting any clinical review. The same would be true to detect AI-generated appeal filings, enrollment forms, or NPI registrations.

Like all tools, AI can be used for good or ill. Our job as fraud hunters is not only to use the tools but also to anticipate how others with ill intent may use them, and then to adapt and evolve to address those issues before or as they arise. As the fraud gets more sophisticated, we need to create the sophisticated tools needed to stay one step ahead in the most dangerous game.

Final Thoughts

- Artificial Intelligence is here to stay
- Its program integrity possibilities are endless
  - Clinical review, data analysis, predictive analytics
- But – it also can be used to help commit fraud
  - AI-generated fake progress notes
  - Better device/drug sales pitches
  - Templated appeal narratives
- The Fraud Hunter has to evolve
  - New approaches
  - New weapons
